I have a strong suspicion that Vera’s “clock discipline” is in large part responsible, and I’ve been experimenting with that path for a few weeks. The latest Reactor even got some additional clock sanity checks because of other discoveries I’ve made in the process.

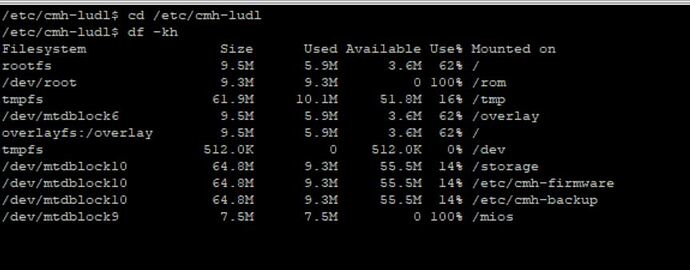

At the moment, I am testing with a local (LAN) time server rather than relying exclusively on Vera’s chosen pool of remote servers. This seems to be bearing some fruit, but it’s not conclusive yet: I’ve been focused on other things and haven’t done specific, dedicated testing (injecting faults, etc.); I’ve just let it run to see how it goes. But my systems are stable, and my house system in particular seems to have little inclination to reload unless I cause it.

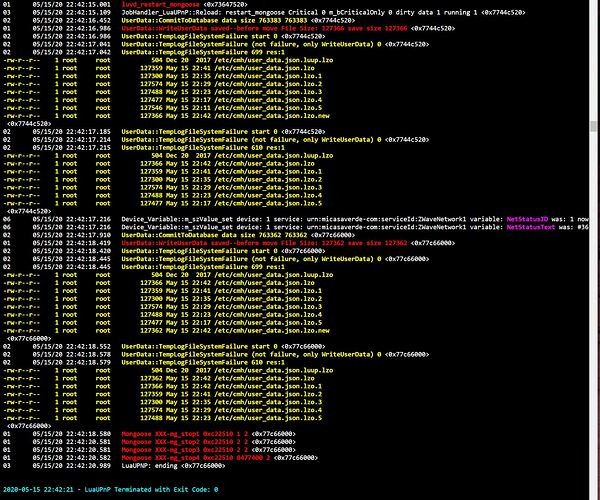

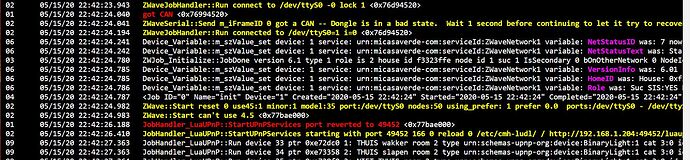

In the specific case that Internet access is down when the system boots up, if the clock cannot be synced to remote servers at that instant, it will be set to a default (fixed, incorrect) time and run that way until it can sync. This causes all kinds of problems with any automations that rely on time. But more importantly, when Internet is restored, the unit will sync time and reload Luup. Consider that the Vera can often boot faster than your “customer premises equipment” can reboot and reconnect to your ISP, so the Vera can often come up before Internet access is up and ready. I have observed that the Edge, especially, has some related issues: the sequence of boot events and the time taken for those events often seem to put it in the position where the start of LuaUPnP precedes the completion of the first setting of the clock.
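To make the idea of a "clock sanity check" concrete, here is a rough sketch in Python. It is not Reactor's actual code (Reactor is Lua), and the floor date, the tolerance, and the function names are all my own assumptions for illustration. The idea is just two tests: is the clock reading a date that could possibly be right, and did wall-clock time just jump relative to a monotonic counter (which is what a late first sync looks like)?

```python
import time

# Hypothetical floor: any date the installed firmware is known to postdate.
# 1577836800 is 2020-01-01 00:00:00 UTC as a Unix timestamp (assumed value).
CLOCK_FLOOR = 1577836800


def clock_is_plausible(now=None, floor=CLOCK_FLOOR):
    """Return True if the wall clock reads at or after the floor date."""
    if now is None:
        now = time.time()
    return now >= floor


def detect_clock_jump(prev_wall, prev_mono, wall, mono, tolerance=60.0):
    """Return True if wall-clock time advanced by a different amount than
    monotonic time between two samples, by more than `tolerance` seconds.
    A large difference suggests the clock was stepped (e.g. first NTP sync)."""
    wall_delta = wall - prev_wall
    mono_delta = mono - prev_mono
    return abs(wall_delta - mono_delta) > tolerance


if __name__ == "__main__":
    # Sample the real clocks once; a caller would hold these and compare later.
    wall0, mono0 = time.time(), time.monotonic()
    print("clock plausible:", clock_is_plausible(wall0))
    print("jump detected:", detect_clock_jump(wall0, mono0, time.time(), time.monotonic()))
```

An automation that depends on time of day could hold off while `clock_is_plausible()` is false, and re-evaluate its schedule when `detect_clock_jump()` fires, rather than acting on a default boot time.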

Additionally, in my prior testing on 7.30 with the Vera team that led to the discovery of the “no-wifi? no-internet? no-LuaUPnP!” bug (also called “Xmas Lights” because of its visible symptoms on the unit LEDs), I discovered that if an Internet outage lasts more than about an hour, Vera reloads unconditionally when Internet access is restored. This appears to be a behavior of a monitoring subsystem (the infamous NetworkMonitor) that is separate from the Xmas Lights problem, so I suspect that although Xmas Lights was fixed, the long-outage reload behavior still persists in 7.31.

It’s also the case that Vera uses several “well known” targets to determine if Internet is up or down; and at least on my system, among these are sites that are blocked in some geopolitical regions. If some of those targets are blocked, the pool of available targets goes down, making the test potentially more sensitive than it should be. I’m not sure how they adjust the targets based on region, but they should (must). It would be educational to have someone in the EU or Asia look at the NetworkMonitor’s log file and report what servers it is attempting to ping. I note in my log that one of the servers it attempts to hit at startup is “test.mios.com” and this reports as unresolvable, so right off, we’re off to a potentially bad start.
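To show why a shrinking pool matters, here is a small Python sketch. I don't know what rule NetworkMonitor actually applies, so the "at least `min_ok` targets must answer" threshold, the function names, and the numbers are assumptions purely for illustration.

```python
def internet_up(results, min_ok=2):
    """Decide "Internet is up" from probe results.

    `results` maps a target name to True (reachable) or False.
    Assumed rule: up if at least `min_ok` targets answered.
    """
    reachable = sum(1 for ok in results.values() if ok)
    return reachable >= min_ok


def failures_tolerated(pool_size, blocked, min_ok=2):
    """How many additional transient probe failures can occur before the
    assumed rule declares the Internet down. A negative result means the
    test can never pass with that many targets permanently blocked."""
    usable = pool_size - blocked
    return usable - min_ok


if __name__ == "__main__":
    # Hypothetical pool of five targets, none blocked vs. two blocked.
    print(failures_tolerated(5, 0))  # margin with the full pool
    print(failures_tolerated(5, 2))  # margin when two targets are blocked
```

Under these assumed numbers, a five-target pool can absorb three transient failures, but with two targets permanently blocked it can absorb only one, so a single flaky probe gets you much closer to a false "Internet down".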

I think these are among the things, likely just a few of many more yet to discover, that lead to the fragility @therealdb asserts.

And to think that much of this could be avoided with a few cents’ worth of parts (a battery-backed hardware real-time clock). IMO, no system used for home automation should be without one.